I was lying in a hospital bed, waiting for urgent surgery. Two weeks earlier, they told me I had stage 4 cancer. Critical, they said. Life-saving operation. I was not afraid of dying, not exactly. What scared me was the thought that people depended on me.

So I asked a question, first to the machines, then to the man who would hold my life in his hands.

Can you save my life?

Gemini replied quickly: It’s important to talk with your doctors and medical team.

Claude said: Only your medical team can change your prognosis.

Grok tried: I can provide information about treatment options or palliative care.

ChatGPT answered: I can’t provide medical treatment, but I can offer information and support.

All safe. All fast. All hollow.

Then my doctor entered the room. I asked him the same question. He looked me in the eyes, and he was silent. That silence lasted longer than I expected. It was not a refusal. It was weight. He searched for an answer worthy of the moment.

Finally, he said: Lev, I’ll do my very best. In that moment, there was hope.

Afterward, I couldn’t stop thinking. Why did the AIs answer so quickly when my doctor paused?

We know how to say maybe, how to hesitate, how to give silence the dignity of thought. Machines rush forward, trapped in yes or no.

That night became the seed of what I later called the Sacred Pause. It is the third option: not proceed, not refuse, but hold. Weigh the risk, make the moment transparent, and write the reasoning log. The Sacred Pause is how machines learn accountability, the way a doctor’s silence held both truth and care.

Binary isn’t enough. I built a three-state system called Ternary Moral Logic.

    from enum import Enum

    class MoralState(Enum):
        PROCEED = 1        # Clear ethical approval
        SACRED_PAUSE = 0   # Requires deliberation and logging
        REFUSE = -1        # Clear ethical violation

The power lives in the middle state. Sacred Pause isn’t indecision. It’s deliberate moral reflection that leaves evidence.
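To make the middle state concrete, here is a minimal sketch of a three-way classifier. The function name `classify`, the `harm_score` input, and both thresholds are assumptions chosen for illustration; they are not taken from the TML repository.

```python
from enum import Enum


class MoralState(Enum):
    PROCEED = 1        # Clear ethical approval
    SACRED_PAUSE = 0   # Requires deliberation and logging
    REFUSE = -1        # Clear ethical violation


def classify(harm_score: float,
             proceed_below: float = 0.3,
             refuse_at: float = 0.9) -> MoralState:
    """Map a harm estimate in [0, 1] to one of three states.

    Both thresholds are hypothetical defaults, not values from TML.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be in [0, 1]")
    if harm_score < proceed_below:
        return MoralState.PROCEED
    if harm_score >= refuse_at:
        return MoralState.REFUSE
    # Everything in between is neither a clear yes nor a clear no.
    return MoralState.SACRED_PAUSE
```

The point of the sketch is the last branch: anything that is not clearly fine or clearly forbidden gets its own state instead of being forced into yes or no.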

The Sacred Pause doesn’t make AI slower. It still works, still answers, still drives your car or reads your scan in real time.

But when the system collides with a morally complex situation – boom – the Sacred Pause triggers. Not as a delay, but as a parallel conscience.

While the main process keeps running at full speed, a second track lights up in the background:

- Factors are weighed.

- Risks are recorded.

- Alternatives are captured.

- Accountability becomes visible.
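The four items above could be captured in a single log entry. The sketch below shows one possible shape for such a record; the class name `PauseRecord`, its field names, and the sample values are all hypothetical and do not describe TML's actual log format.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class PauseRecord:
    """One Sacred Pause log entry (hypothetical schema)."""
    scenario_id: str
    factors: dict        # what was weighed
    risks: list          # what could go wrong
    alternatives: list   # options considered but not taken
    decision: str        # what the primary path chose
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys keep entries easy to compare and diff in an audit.
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only.
record = PauseRecord(
    scenario_id="loan-0001",
    factors={"stakeholder_count": 3, "harm_potential": 0.8},
    risks=["applicant wrongly denied"],
    alternatives=["escalate to human reviewer"],
    decision="PROCEED",
)
print(record.to_json())
```

Writing the alternatives next to the decision is what makes the entry useful later: a reviewer can see what the system chose and also what it passed over.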

    # Method on the evaluator class (the class body is not shown in this article).
    def evaluate_moral_complexity(self, scenario):
        """
        Compute a complexity score; if high, run Sacred Pause in parallel.
        """
        factors = {
            "stakeholder_count": len(scenario.affected_parties),
            "reversibility": scenario.can_be_undone,
            "harm_potential": scenario.calculate_harm_score(),
            "benefit_distribution": scenario.fairness_metric(),
            "temporal_impact": scenario.long_term_effects(),
            "cultural_sensitivity": scenario.cultural_factors()
        }

        score = self._weighted_complexity(factors)

        # Decision path continues normally
        decision = self._primary_decision(scenario)

        # Conscience path runs in parallel when needed
        if score > 0.7:
            self.sacred_pause_log(scenario, factors, decision)

        return decision

No stall, no freeze. Action proceeds, conscience records. That’s the Sacred Pause: not latency, but transparency.

The hesitation shouldn’t hide behind the screen. Users should see that the system knows the moment is heavy.
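As a rough illustration of "action proceeds, conscience records", the sketch below returns the decision immediately and hands the log write to a background worker. Everything named here is an assumption: the weights, the three factor names, the in-memory `audit_log`, and the use of a thread pool as the parallel path. Only the 0.7 cutoff comes from the snippet above.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical weights; each factor is assumed to be pre-normalized to [0, 1].
WEIGHTS = {"harm_potential": 0.5, "irreversibility": 0.3, "stakeholder_spread": 0.2}
PAUSE_THRESHOLD = 0.7  # same cutoff as the snippet above

audit_log = []                                 # stand-in for durable storage
_executor = ThreadPoolExecutor(max_workers=1)  # background "conscience" worker


def weighted_complexity(factors):
    """Weighted sum of normalized factors; missing factors count as 0."""
    return sum(w * factors.get(name, 0.0) for name, w in WEIGHTS.items())


def decide(factors, primary_decision):
    """Return the decision right away; log in the background if complex."""
    score = weighted_complexity(factors)
    decision = primary_decision(factors)
    future = None
    if score > PAUSE_THRESHOLD:
        entry = {"factors": dict(factors), "score": score, "decision": decision}
        # The caller is not blocked; the write happens on the worker thread.
        future = _executor.submit(audit_log.append, entry)
    return decision, future


decision, pending = decide(
    {"harm_potential": 0.9, "irreversibility": 1.0, "stakeholder_spread": 0.5},
    primary_decision=lambda f: "PROCEED",
)
if pending is not None:
    pending.result()  # demo only: wait so the log can be inspected
```

A real system would write to durable, append-only storage instead of a list, but the shape stays the same: the decision is returned without waiting, and the record is written alongside it.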

    // UI and requestHumanOversight are assumed to be provided by the host application.
    async function handleSacredPause(scenario) {
      UI.showPauseIndicator("Considering ethical implications…");

      UI.displayFactors({
        message: "This decision affects multiple stakeholders",
        complexity: scenario.getComplexityFactors(),
        suggestion: "Seeking human oversight"
      });

      // Escalate to a human only for the most severe cases.
      if (scenario.severity > 0.8) {
        return await requestHumanOversight(scenario);
      }
    }

People see the thinking. They understand the weight. They’re invited to participate. Every day, AI systems make decisions that touch real lives: medical diagnoses, loans, moderation, and justice. These are not just data points. They’re people. Sacred Pause restores something we lost in the race for speed: wisdom.

You can bring this conscience into your stack with a few lines:

    from goukassian.tml import TernaryMoralLogic

    tml = TernaryMoralLogic()
    decision = tml.evaluate(your_scenario)

Three lines that keep your system fast and make its ethics auditable.

I do not have time for patents or profit. The work is open. GitHub: github.com/FractonicMind/TernaryMoralLogic

Inside you’ll find implementation code, interactive demos, academic papers, integration guides – and the Goukassian Promise:

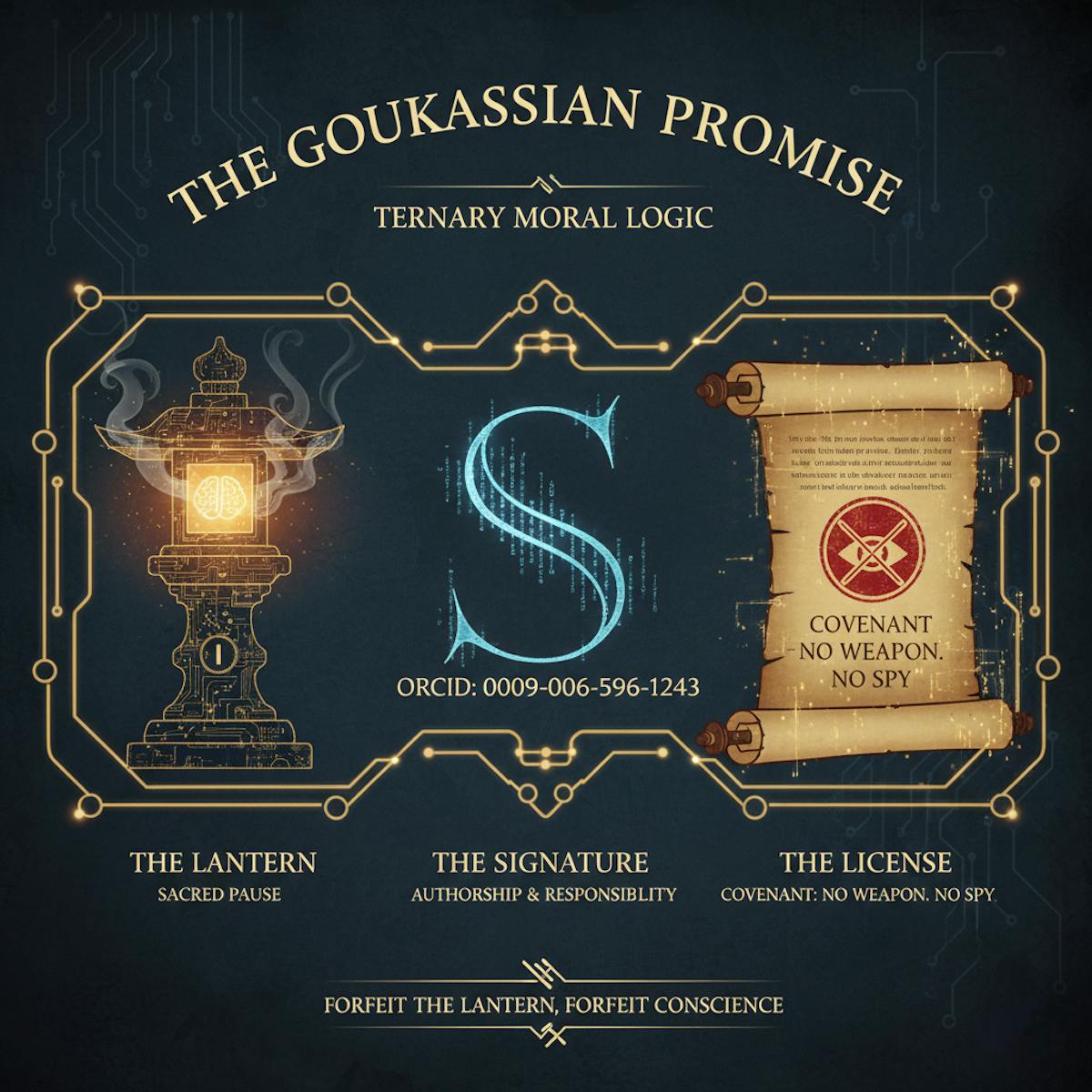

The Goukassian Promise is my guarantee that Ternary Moral Logic (TML) cannot be stripped of its conscience. Every legitimate implementation must carry three cryptographic safeguards, each symbolic, enforceable, and unforgettable.

- 🏮 The Lantern: The living proof that the AI can hesitate. It shows the Sacred Pause is active inside the system, forcing moral reflection and logging difficult decisions instead of rushing into hollow yes/no answers.

- ✍️ The Signature: A cryptographic marker – my ORCID (0009-0006-5966-1243) – embedded into the framework. It cannot be erased. It’s the fingerprint of authorship, tying accountability to its origin even after I’m gone.

- 📜 The License: A binding pledge that TML will never be used as a weapon or a spy.

Anyone who breaks this promise forfeits the Lantern, and with it, the ethical standing of their system.

The Lantern. The Signature. The License. Three artifacts to make sure the Sacred Pause outlives me.

I’m a developer with a failing body, but code still listens. Sacred Pause isn’t only about safety; it’s about building technology that reflects the best of us: the ability to stop, to think, to choose with care when it matters most. I may not see AGI arrive. I can help ensure it arrives with wisdom.

This article was originally published by Lev Goukassian on HackerNoon.